I've mentioned MapReduce a couple of times.

But what is a MapReduce job? Well, a MapReduce job (I won't resort to the documentation for this, so I hope it is clear and correct) is a process that consists of two parts: the Map and the Reduce.

The Map part takes the input data (read in a way that you specify) and breaks it into individual pieces (more on this later).

The Reduce part takes all those individual pieces and groups them into meaningful information / results.

Unclear? Well, to simplify (for those familiar with SQL): the Reduce part basically works like a GROUP BY clause, and the Reducer code can do SUM, AVG, COUNT and so forth, as well as more complex operations.
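To make the GROUP BY comparison a bit more concrete, here is a minimal sketch in plain Python (this is not Hadoop code). The `records` data and the names `map_record`, `group_by_key`, `reduce_group` and `run_job` are all made up for illustration; the only goal is to show the map, group and reduce phases side by side.

```python
from collections import defaultdict

# Hypothetical input records: (region, sale_amount). Not from any real dataset.
records = [
    ("north", 10.0),
    ("south", 4.0),
    ("north", 6.0),
    ("south", 8.0),
    ("east", 5.0),
]


def map_record(record):
    """Map step: turn one input record into a (key, value) pair."""
    region, amount = record
    return region, amount


def group_by_key(pairs):
    """Grouping step: collect all values that share a key (the GROUP BY part)."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_group(key, values):
    """Reduce step: aggregate one group, like SUM, COUNT and AVG in SQL."""
    total = sum(values)
    count = len(values)
    return key, {"sum": total, "count": count, "avg": total / count}


def run_job(data):
    """Run the three phases in order on an in-memory list."""
    pairs = [map_record(record) for record in data]
    groups = group_by_key(pairs)
    return dict(reduce_group(key, values) for key, values in groups.items())


if __name__ == "__main__":
    for region, stats in sorted(run_job(records).items()):
        print(region, stats)
```

In SQL terms this is roughly `SELECT region, SUM(amount), COUNT(*), AVG(amount) ... GROUP BY region`. In a real cluster the grouping happens between the Map and Reduce phases, spread across many machines, rather than in one in-memory dictionary.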

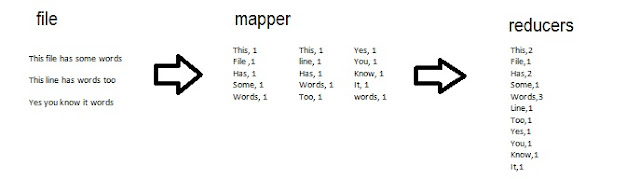

A specific example (WordCount, using another file):

Well, in the picture above, you start with a file containing some words. Then the Mapper (the Map program) breaks the file into words and associates a 1 with each word, creating one tuple per word occurrence.

After that, the Reducer (the Reduce program) goes through all the individual tuples and groups them by word, summing all the 1s.

That is a simple WordCount MapReduce task.
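If it helps, the WordCount flow can be simulated in a few lines of plain Python. This is only a sketch of the idea, not the Java WordCount example that ships with Hadoop; the function names `mapper`, `reducer` and `word_count` and the sample sentences are my own assumptions.

```python
from collections import defaultdict


def mapper(line):
    """Map: split a line into words and emit a (word, 1) tuple for each one."""
    for word in line.split():
        yield word, 1


def reducer(word, counts):
    """Reduce: sum all the 1s that were emitted for a single word."""
    return word, sum(counts)


def word_count(lines):
    """Run map, group the tuples by word, then reduce each group."""
    grouped = defaultdict(list)
    for line in lines:
        for word, one in mapper(line):
            grouped[word].append(one)
    return dict(reducer(word, ones) for word, ones in grouped.items())


if __name__ == "__main__":
    sample = ["big data is big", "data is everywhere"]  # made-up sample text
    print(word_count(sample))
```

Note that this splits on whitespace only, so "Data" and "data," would count as different words from "data".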

To run the WordCount example (which ships with Hadoop as a sample), just run the steps below (we will use the file discussed on http://pinelasgarden.blogspot.pt/2012/05/en-big-data-helper-part-3-loading-data.html):

(Screenshot: steps 1 and 2)

- cd /usr/lib/hadoop-0.20 (to find the examples jar)

- hadoop jar hadoop-0.20.2-cdh3u0-examples.jar wordcount sapwc sap.wc.out (to run the MapReduce job, with sapwc as the input and sap.wc.out as the output directory)

- hadoop dfs -getmerge sap.wc.out sapOUT (to merge the job output into a single local file, sapOUT)

(Screenshot: step 3)

Thank you.

P.S. To sort this output by count, highest first, use:

$ sort -rnk 2 sapOUT
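If you would rather do the sorting in a script, here is a rough Python equivalent. It assumes each line of the merged output is a word, a tab, then a count; the function name `sort_by_count` is hypothetical, and the script only reads `sapOUT` if that file exists in the current directory.

```python
import os


def sort_by_count(lines):
    """Return (word, count) pairs ordered from most to least frequent."""
    pairs = []
    for line in lines:
        line = line.rstrip("\n")
        if not line:
            continue  # skip empty lines
        # Assumption: the word and its count are separated by a tab.
        word, count = line.rsplit("\t", 1)
        pairs.append((word, int(count)))
    return sorted(pairs, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    path = "sapOUT"  # the local file written by the getmerge step
    if os.path.exists(path):
        with open(path, encoding="utf-8") as handle:
            for word, count in sort_by_count(handle):
                print(f"{word}\t{count}")
```

Words with the same count keep their original relative order here, which may differ slightly from what `sort` prints for ties.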

All done! Congrats on your first MapReduce job.

Lesson 6 (I think it's 6) will show you how to write your own MapReduce functions (a basic example).

Thank you.

-- ====================

Other Tutorial Links (to remove any doubts):

http://pinelasgarden.blogspot.pt/2012/04/en-big-data-helper-part-1-concepts.html

http://pinelasgarden.blogspot.pt/2012/04/en-big-data-helper-part-2-getting.html

http://pinelasgarden.blogspot.pt/2012/05/en-big-data-helper-part-3-loading-data.html

http://pinelasgarden.blogspot.pt/2012/05/en-big-data-helper-part-4-pig.html

